
Dialogical Fingerprinting

Last updated October 26, 2022 by Brian Plüss

Duration: March – October 2019

People: Chris Reed (PI), Jacky Visser (PDRA), Matt Foulis (research intern)

Funder: Dstl / DASA

Project Description

How people engage in dialogue is as distinctive as their fingerprint. We show that this idea can be operationalised using state-of-the-art deep learning models: who is speaking can be determined from how they interact, and their role and attitude from the language they use.

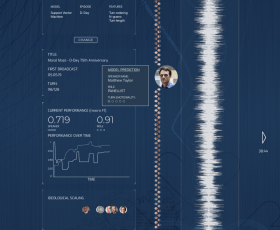

Our demonstrator system for the algorithms underpinning the Dstl-DASA Behavioural Analytics project on Dialogical Fingerprinting provides an intuitive interface to a range of data sets, classical and deep learning AI algorithms, and output characteristics, including speaker identity, dialogical role, emotional status and political alignment.

Select a machine learning algorithm, the features to use, the training data and the properties to look for, then lock and learn. The selected algorithm builds a model, which is then applied to test data: an episode of BBC Radio 4’s Moral Maze. As playback continues, the model makes increasingly confident predictions about who’s who.
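This page does not describe how the demonstrator's predictions become more confident during playback. The sketch below illustrates one plausible mechanism, using a deliberately simple classical technique rather than the project's deep learning models: a hand-rolled multinomial naive Bayes classifier over word counts, whose per-speaker log-likelihoods are summed over successive turns, so evidence accumulates and the posterior sharpens. The speaker labels, the toy utterances and the function names (`train`, `running_posteriors`) are hypothetical and do not come from the demonstrator.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    # Crude lowercase whitespace tokenisation; a stand-in for real dialogical features.
    return text.lower().split()


def train(labelled_turns):
    """Fit a multinomial naive Bayes model from (speaker, utterance) pairs."""
    word_counts = defaultdict(Counter)
    vocab = set()
    for speaker, text in labelled_turns:
        tokens = tokenize(text)
        word_counts[speaker].update(tokens)
        vocab.update(tokens)
    return word_counts, vocab


def log_likelihood(model, speaker, text):
    """Log-probability of an utterance under one speaker's word distribution."""
    word_counts, vocab = model
    counts = word_counts[speaker]
    total = sum(counts.values())
    # Laplace (add-one) smoothing so an unseen word does not rule a speaker out.
    return sum(math.log((counts[w] + 1) / (total + len(vocab))) for w in tokenize(text))


def running_posteriors(model, turns):
    """Yield a posterior over candidate speakers after each successive turn.

    Assumes every turn comes from the same unknown speaker and starts
    from a uniform prior over the speakers seen in training.
    """
    speakers = sorted(model[0])
    scores = {s: 0.0 for s in speakers}
    for text in turns:
        for s in speakers:
            scores[s] += log_likelihood(model, s, text)  # evidence accumulates
        # Normalise in log space (subtract the maximum) to avoid underflow.
        peak = max(scores.values())
        weights = {s: math.exp(v - peak) for s, v in scores.items()}
        z = sum(weights.values())
        yield {s: w / z for s, w in weights.items()}


# Hypothetical toy data, not taken from any Moral Maze transcript.
training = [
    ("chair", "welcome to the programme tonight we ask"),
    ("chair", "let me bring in our next witness"),
    ("panellist", "but surely the moral question here is"),
    ("panellist", "i would push back on that surely"),
]
model = train(training)
test_turns = ["let me bring in", "welcome our witness"]

for step, post in enumerate(running_posteriors(model, test_turns), 1):
    print(step, max(post, key=post.get), round(post["chair"], 3))
```

Because the log-likelihoods are summed rather than recomputed per turn, each additional turn from the same speaker can only add evidence, which is why the probability assigned to the best-matching speaker tends to rise as more of the episode is heard.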

Key publications

Foulis, M., Visser, J., & Reed, C. (2020a). Dialogical fingerprinting of debaters. In H. Prakken, S. Bistarelli, F. Santini & C. Taticchi (Eds.), Proceedings of COMMA 2020, 8-11 September 2020 (pp. 465-466). Amsterdam: IOS Press. DOI: 10.3233/FAIA200536

Foulis, M., Visser, J., & Reed, C. (2020b). Interactive visualisation of debater identification and characteristics. In F. Sperrle, M. El-Assady, B. Plüss, R. Duthie & A. Hautli-Janisz (Eds.), Proceedings of the COMMA Workshop on Argument Visualisation, 8 September 2020 (pp. 1-7).